Question or problem about Python programming:

Is it possible to get the results of a test (i.e. whether all assertions have passed) in a tearDown() method? I’m running Selenium scripts, and I’d like to do some reporting from inside tearDown(), however I don’t know if this is possible.


How to solve the problem:

Solution 1:

CAVEAT: I have no way of double checking the following theory at the moment, being away from a dev box. So this may be a shot in the dark.

Perhaps you could check the return value of sys.exc_info() inside your tearDown() method. If it returns (None, None, None), you know the test case succeeded. Otherwise, you could use the returned tuple to interrogate the exception object.

See sys.exc_info documentation.

Another more explicit approach is to write a method decorator that you could slap onto all your test case methods that require this special handling. This decorator can intercept assertion exceptions and based on that modify some state in self allowing your tearDown method to learn what’s up.

Solution 2:

This solution works for Python versions 2.7 to 3.9 (the highest current version), without any decorators or other modifications to any code before tearDown. Everything works according to the built-in classification of results. Skipped tests and expectedFailure are also recognized correctly. It evaluates the result of the current test, not a summary of all tests passed so far. It is also compatible with pytest.

Comments: Only one or zero exceptions (error or failure) need to be reported, because no more can be expected before tearDown. The unittest package expects that a second exception can be raised by tearDown. Therefore the lists errors and failures can together contain only one or zero elements before tearDown. The lines after the “demo” comment report a short result.

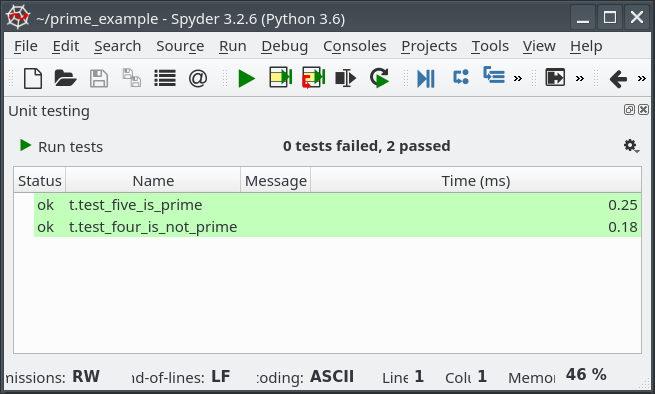

Demo output: (not important)

Comparison to other solutions (with respect to the commit history of the Python source repository):

- This solution uses a private attribute of the TestCase instance, like many other solutions, but I carefully checked all relevant commits in the Python source repository and confirmed that three alternative names cover the code history from Python 2.7 to 3.6.2 without any gap. It can be a problem after some new major Python release, but it could be clearly recognized, skipped and easily fixed later for a new Python. An advantage is that nothing is modified before running tearDown, so it should never break the test, and all functionality of unittest is supported. It works with pytest and could work with many extending packages, but not with nosetest (not a surprise, because nosetest is not compatible with e.g. unittest.expectedFailure).

- The solutions with decorators on the user test methods or with a customized failureException (mgilson, Pavel Repin 2nd way, kenorb) are robust against future Python versions, but if everything should work completely, they would grow like a snowball with more supported exceptions and more replicated internals of unittest. The decorated functions have less readable tracebacks (even more levels added by one decorator), they are more complicated to debug, and it is unpleasant if another, more important decorator has a problem. (Thanks to mgilson the basic functionality is ready and known issues can be fixed.)

- The solution with a modified run method and a caught result parameter (scoffey) should also work for Python 2.6. The interpretation of results can be improved to meet the requirements of the question, but nothing can work in Python 3.4+, because result is updated after the tearDown call, never before.

- Mark G.: (tested with Python 2.7, 3.2, 3.3, 3.4 and with nosetest)

- The solution by exc_info() (Pavel Repin 1st way) works only with Python 2.

- Other solutions are principally similar, but less complete or with more disadvantages.

Explained by Python source repository

= Lib/unittest/case.py =

Python v 2.7 – 3.3

Python v. 3.4 – 3.6

Note (by reading Python commit messages): A reason why test results are so decoupled from tests is memory leak prevention. Every exception info object can access the frames of the failed process state, including all local variables. If a frame is assigned to a local variable in a code block that could also fail, then a cross memory reference could easily be created. This is not terrible, thanks to the garbage collector, but free memory can become fragmented more quickly than if the memory were released correctly. This is why exception information and tracebacks are converted to strings very early, and why temporary objects like self._outcome are encapsulated and set to None in a finally block in order to prevent memory leaks.

Solution 3:

If you take a look at the implementation of unittest.TestCase.run, you can see that all test results are collected in the result object (typically a unittest.TestResult instance) passed as argument. No result status is left in the unittest.TestCase object.

So there isn’t much you can do in the unittest.TestCase.tearDown method unless you mercilessly break the elegant decoupling of test cases and test results with something like this:

EDIT: This works for Python 2.6 – 3.3 (modified for newer Python versions below).

Solution 4:

If you are using Python 2, you can use the private attribute _resultForDoCleanups. It holds a TextTestResult object:

<unittest.runner.TextTestResult run=1 errors=0 failures=0>

You can use this object to check the result of your tests:

If you are using Python 3 (up to 3.3), you can use _outcomeForDoCleanups:

Solution 5:

It depends what kind of reporting you’d like to produce.

If you’d like to perform some action on failure (such as taking a screenshot), you can do that by overriding failureException instead of using tearDown().

For example:

Solution 6:

Following on from amatellanes’ answer, if you’re on Python3.4, you can’t use _outcomeForDoCleanups. Here’s what I managed to hack together:

yucky, but it seems to work.

Solution 7:

Here’s a solution for those of us who are uncomfortable using solutions that rely on unittest internals:

First, we create a decorator that will set a flag on the TestCase instance to determine whether or not the test case failed or passed:

This decorator is actually pretty simple. It relies on the fact that unittest detects failed tests via Exceptions. As far as I’m aware, the only special exception that needs to be handled is unittest.SkipTest (which does not indicate a test failure). All other exceptions indicate test failures so we mark them as such when they bubble up to us.

We can now use this decorator directly:

It’s going to get really annoying writing this decorator all the time. Is there a way we can simplify? Yes there is!* We can write a metaclass to handle applying the decorator for us:

Now we apply this to our base TestCase subclass and we’re all set:

There are likely a number of cases that this doesn’t handle properly. For example, it does not correctly detect failed subtests or expected failures. I’d be interested in other failure modes of this, so if you find a case that I’m not handling properly, let me know in the comments and I’ll look into it.

*If there wasn’t an easier way, I wouldn’t have made _tag_error a private function 😉

Solution 8:

Python 2.7.

You can also get result after unittest.main():

or use suite:

Solution 9:

Inspired by scoffey’s answer, I decided to take mercilessness to the next level, and have come up with the following.

It works in both vanilla unittest, and also when run via nosetests, and also works in Python versions 2.7, 3.2, 3.3, and 3.4 (I did not specifically test 3.0, 3.1, or 3.5, as I don’t have these installed at the moment, but if I read the source code correctly, it should work in 3.5 as well):

When run with unittest:

When run with nosetests:

Background

I started with this:

However, this only works in Python 2. In Python 3, up to and including 3.3, the control flow appears to have changed a bit: Python 3’s unittest package processes results after calling each test’s tearDown() method… this behavior can be confirmed if we simply add an extra line (or six) to our test class:

Then just re-run the tests:

…and you will see that you get this as a result:

Now, compare the above to Python 2’s output:

Since Python 3 processes errors/failures after the test is torn down, we can’t readily infer the result of a test using result.errors or result.failures in every case. (I think it probably makes more sense architecturally to process a test’s results after tearing it down, however, it does make the perfectly valid use-case of following a different end-of-test procedure depending on a test’s pass/fail status a bit harder to meet…)

Therefore, instead of relying on the overall result object, we can reference _outcomeForDoCleanups, as others have already mentioned. It contains the result object for the currently running test and has the necessary errors and failures attributes, which we can use to infer a test’s status by the time tearDown() has been called:

This adds support for the early versions of Python 3.

As of Python 3.4, however, this private member variable no longer exists, and instead, a new (albeit also private) method was added: _feedErrorsToResult.

This means that for versions 3.4 (and later), if the need is great enough, one can — very hackishly — force one’s way in to make it all work again like it did in version 2…

…provided, of course, all consumers of this class remember to super(…, self).tearDown() in their respective tearDown methods…

Disclaimer: Purely educational, don’t try this at home, etc. etc. etc. I’m not particularly proud of this solution, but it seems to work well enough for the time being, and is the best I could hack up after fiddling for an hour or two on a Saturday afternoon…

Solution 10:

I think the proper answer to your question is that there isn’t a clean way to get test results in tearDown(). Most of the answers here involve accessing some private parts of the python unittest module and in general feel like workarounds. I’d strongly suggest avoiding these since the test results and test cases are decoupled and you should not work against that.

If you are in love with clean code (like I am), I think what you should do instead is instantiate your TestRunner with your own TestResult class. Then you can add whatever reporting you want by overriding these methods:

Hope this helps!

This is an update to Doug Hellmann's excellent PyMOTW article found here:

The code and examples here have been updated by Corey Goldberg to reflect Python 3.3.

further reading:

unittest - Automated testing framework

Python's unittest module, sometimes referred to as 'PyUnit', is based on the XUnit framework design by Kent Beck and Erich Gamma. The same pattern is repeated in many other languages, including C, Perl, Java, and Smalltalk. The framework implemented by unittest supports fixtures, test suites, and a test runner to enable automated testing for your code.

Basic Test Structure

Tests, as defined by unittest, have two parts: code to manage test 'fixtures', and the test itself. Individual tests are created by subclassing TestCase and overriding or adding appropriate methods. For example,

In this case, the SimplisticTest has a single test() method, which would fail if True is ever False.
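A test of this shape could be written as follows. This is a sketch reconstructed from the description (class SimplisticTest with a single test() method); the runner lines at the end are only there to make the snippet self-contained.

```python
import io
import unittest


class SimplisticTest(unittest.TestCase):

    def test(self):
        # Fails only if True is ever False.
        self.assertTrue(True)


suite = unittest.defaultTestLoader.loadTestsFromTestCase(SimplisticTest)
result = unittest.TextTestRunner(stream=io.StringIO()).run(suite)
print("tests run:", result.testsRun, "successful:", result.wasSuccessful())
```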

Running Tests

The easiest way to run unittest tests is to include:

at the bottom of each test file, then simply run the script directly from the command line:

This abbreviated output includes the amount of time the tests took, along with a status indicator for each test (the '.' on the first line of output means that a test passed). For more detailed test results, include the -v option:
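The difference between the abbreviated and the detailed output can also be reproduced programmatically. The sketch below assumes the SimplisticTest class described earlier and uses the runner's verbosity argument, which corresponds to the -v command-line option; `run_with` is a hypothetical helper.

```python
import io
import unittest

# At the bottom of a test file one would normally write:
#     if __name__ == "__main__":
#         unittest.main()


class SimplisticTest(unittest.TestCase):
    def test(self):
        self.assertTrue(True)


def run_with(verbosity):
    """Run SimplisticTest and return the text the runner printed."""
    stream = io.StringIO()
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(SimplisticTest)
    unittest.TextTestRunner(stream=stream, verbosity=verbosity).run(suite)
    return stream.getvalue()


brief = run_with(1)    # one '.' per passing test
verbose = run_with(2)  # one line per test, ending in 'ok'
print(brief)
print(verbose)
```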

Test Outcomes

Tests have 3 possible outcomes:

The test passes.

The test does not pass, and raises an AssertionError exception.

The test raises an exception other than AssertionError.

There is no explicit way to cause a test to 'pass', so a test's status depends on the presence (or absence) of an exception.

When a test fails or generates an error, the traceback is included in the output.

In the example above, test_fail() fails and the traceback shows the line with the failure code. It is up to the person reading the test output to look at the code to figure out the semantic meaning of the failed test, though. To make it easier to understand the nature of a test failure, the assert*() methods all accept an argument msg, which can be used to produce a more detailed error message.

Asserting Truth

Most tests assert the truth of some condition. There are a few different ways to write truth-checking tests, depending on the perspective of the test author and the desired outcome of the code being tested. If the code produces a value which can be evaluated as true, the method assertTrue() should be used. If the code produces a false value, the method assertFalse() makes more sense.
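A short sketch of both styles, with a hypothetical `TruthTest` class; which method reads better depends on whether the value under test is expected to be truthy or falsy.

```python
import io
import unittest


class TruthTest(unittest.TestCase):

    def test_assert_true(self):
        # The expression is expected to evaluate as true.
        self.assertTrue("unittest".startswith("unit"))

    def test_assert_false(self):
        # An empty list evaluates as false.
        self.assertFalse([])


suite = unittest.defaultTestLoader.loadTestsFromTestCase(TruthTest)
result = unittest.TextTestRunner(stream=io.StringIO()).run(suite)
print("successful:", result.wasSuccessful())
```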

Assertion Methods

The TestCase class provides a number of methods to check for and report failures:

Common Assertions

| Method |
|---|
| assertTrue(x, msg=None) |
| assertFalse(x, msg=None) |
| assertIsNone(x, msg=None) |
| assertIsNotNone(x, msg=None) |
| assertEqual(a, b, msg=None) |
| assertNotEqual(a, b, msg=None) |
| assertIs(a, b, msg=None) |
| assertIsNot(a, b, msg=None) |
| assertIn(a, b, msg=None) |
| assertNotIn(a, b, msg=None) |
| assertIsInstance(a, b, msg=None) |
| assertNotIsInstance(a, b, msg=None) |

Other Assertions

| Method |
|---|
| assertAlmostEqual(a, b, places=7, msg=None, delta=None) |
| assertNotAlmostEqual(a, b, places=7, msg=None, delta=None) |
| assertGreater(a, b, msg=None) |
| assertGreaterEqual(a, b, msg=None) |
| assertLess(a, b, msg=None) |
| assertLessEqual(a, b, msg=None) |
| assertRegex(text, regexp, msg=None) |
| assertNotRegex(text, regexp, msg=None) |
| assertCountEqual(a, b, msg=None) |
| assertMultiLineEqual(a, b, msg=None) |
| assertSequenceEqual(a, b, msg=None) |
| assertListEqual(a, b, msg=None) |
| assertTupleEqual(a, b, msg=None) |
| assertSetEqual(a, b, msg=None) |
| assertDictEqual(a, b, msg=None) |

Failure Messages

These assertions are handy, since the values being compared appear in the failure message when a test fails.

And when these tests are run:

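All the assert methods above accept a msg argument that, if specified, is included in the error message on failure. With the default setting longMessage = True, it is appended to the standard message rather than replacing it.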

Testing for Exceptions (and Warnings)

The TestCase class provides methods to check for expected exceptions:

| Method |
|---|
| assertRaises(exception) |
| assertRaisesRegex(exception, regexp) |
| assertWarns(warn, fun, *args, **kwds) |
| assertWarnsRegex(warn, regexp, fun, *args, **kwds) |

As previously mentioned, if a test raises an exception other than AssertionError it is treated as an error. This is very useful for uncovering mistakes while you are modifying code which has existing test coverage. There are circumstances, however, in which you want the test to verify that some code does produce an exception, for example when an invalid value is given to an attribute of an object. In such cases, assertRaises() makes the code clearer than trapping the exception yourself. Compare these two tests:

The results for both are the same, but the second test using assertRaises() is more succinct.
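The comparison can be sketched like this. The helper `raises_error` and the names `ExceptionTest`, `test_trap_locally` and `test_assert_raises` are assumptions chosen for illustration.

```python
import io
import unittest


def raises_error(*args, **kwds):
    """Hypothetical helper that always rejects its input."""
    raise ValueError("Invalid value: %s%s" % (args, kwds))


class ExceptionTest(unittest.TestCase):

    def test_trap_locally(self):
        # Trapping the exception by hand.
        try:
            raises_error("a", b="c")
        except ValueError:
            pass
        else:
            self.fail("Did not see ValueError")

    def test_assert_raises(self):
        # The same check expressed with assertRaises().
        self.assertRaises(ValueError, raises_error, "a", b="c")


suite = unittest.defaultTestLoader.loadTestsFromTestCase(ExceptionTest)
result = unittest.TextTestRunner(stream=io.StringIO()).run(suite)
print("successful:", result.wasSuccessful())
```

assertRaises() can also be used as a context manager (`with self.assertRaises(ValueError):`), which avoids passing the callable and its arguments separately.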

Test Fixtures

Fixtures are resources needed by a test. For example, if you are writing several tests for the same class, those tests all need an instance of that class to use for testing. Other test fixtures include database connections and temporary files (many people would argue that using external resources makes such tests not 'unit' tests, but they are still tests and still useful). TestCase includes a special hook to configure and clean up any fixtures needed by your tests. To configure the fixtures, override setUp(). To clean up, override tearDown().

When this sample test is run, you can see the order of execution of the fixture and test methods:

Test Suites

The standard library documentation describes how to organize test suites manually. I generally do not use test suites directly, because I prefer to build the suites automatically (these are automated tests, after all). Automating the construction of test suites is especially useful for large code bases, in which related tests are not all in the same place. Tools such as nose make it easier to manage tests when they are spread over multiple files and directories.